Opening up the conversation: reflections from the AI Hub’s official launch

In one word, how do you feel about AI in Social Protection? This was the opening question posed to participants at the official launch of the AI Hub of the Digital Convergence Initiative (DCI) on December 2, 2025. The results were revealing: words like ‘excited’, ‘intrigued’, and ‘game-changer’ featured prominently – but so did ‘fear’, ‘regulated’, and ‘wary’. This enthusiasm tempered by a degree of apprehension set the tone for a stimulating afternoon of discussion.

Moderated by social protection expert Valentina Barca, the event brought together 127 participants from governments, civil society, academia and international organizations, and comprised a rich mix of presentations, panel discussions, and a ‘sneak peek’ into the foundational knowledge products soon to be published by the AI Hub.

The AI Hub: balancing the promise and pitfalls of AI in social protection

Whether we love it or hate it, AI is rapidly becoming an important driver of digital transformation in social protection systems around the world. Multiple, overlapping crises are driving governments – particularly in low- and middle-income countries – to explore AI innovation as a means to deliver more targeted social protection benefits to more people, with fewer available resources. The policy challenge is no longer whether AI will be used, but how, on what terms, and in whose interests. How can governments continue innovating and expanding what AI can deliver for social protection, while ensuring that ethical, legal, and human rights risks are actively identified and addressed? Navigating this tension requires more than technical solutions; it calls for informed policy choices, clear frameworks, and strengthened institutional capacities.

And this is where the AI Hub comes in. Ralf Radermacher, Head of the Section, Health, Social Protection and Digitalization at Gesellschaft für Internationale Zusammenarbeit (GIZ), explained that the AI Hub was created to help governments and social protection institutions navigate just such challenges, saying,

‘We are not enthusiasts who want to bring AI into social protection for the sake of technology. But we do believe AI can make an important contribution.’

The Hub is already helping a growing number of partner countries to develop the capacities needed to use AI in ways that are responsible, risk-aware, and adapted to national contexts.

Concerns about trust should not derail AI innovation

Drawing on Dataprev’s more than 50 years of experience managing Brazil’s social security data, the organisation’s CEO, Rodrigo Assumpção, contended that the trust issues raised by AI are not new and have in fact long been a concern for public policy. What AI does change is the speed and scale of both outcomes and potential errors. But, as Mr Assumpção pointed out, ‘we are faster to make the mistakes, but we can also be faster to correct them.’

AI should therefore be treated like any other public policy intervention – requiring robust governance, clear accountability, and continuous evaluation. None of this is possible, however, without good-quality, consolidated, and interoperable data systems, which form the foundation for effective AI use and are essential for building public trust.

Raul Ruggia-Frick, Director of Social Security Development at AI Hub partner organization ISSA, echoed this perspective, pointing out that AI technologies complement the work that social protection institutions are already doing (as recently documented in the ISSA publication on AI applications in social security). Pioneering institutions are building on lessons from early AI applications to develop more advanced models that not only integrate better with existing systems but can be adapted more effectively to national contexts. The AI Hub is playing a critical role in translating innovation at the frontiers of AI into practical, policy-relevant guidance for social protection systems.

A human rights-based approach to AI in social protection is critical

Bringing a human rights lens to the discussion, Teresa Barrio Traspaderne, Researcher on Technology and Economic, Social and Cultural Rights at Amnesty Tech, offered a stark reminder of what happens when efficiency is prioritised over rights, drawing on the organisation’s most recent publication, the Algorithmic Accountability Toolkit.

In social protection, AI algorithms are increasingly used to determine eligibility, flag potential risks, and trigger fraud investigations. Errors or bias in these systems are not simply statistical problems but can have devastating human consequences. A number of high-profile cases illustrate what happens when AI algorithms wrongfully target specific groups or exclude the very people social protection systems are designed to assist – denying them access to essential benefits such as income support or healthcare, often in times of crisis.

In tune with the approach of the AI Hub, Amnesty is calling for the adoption of a rights-based framework built on the three key principles of transparency, participation and accountability, along with impact assessments that meaningfully involve affected populations, including social protection beneficiaries and users of the AI systems.

Raphaël Duteau, Manager of AI & Data Ethics at AI Hub partner organization Employment and Social Development Canada (ESDC), pointed to the need for large-scale quantitative analysis in order to assess fairness in AI models that are used nationwide. In Canada, such analysis revealed that an AI model had failed to identify a key group of social protection beneficiaries – fishermen living in coastal areas.

Shaping responsible AI – a ‘sneak peek’ at the Hub’s foundational knowledge products

As the pressure to introduce AI in social protection grows, governments are required to make high-stakes decisions on AI in the absence of shared definitions, comparable evidence, or practical guidance that can be tailored to their national context. Building consensus on what responsible, innovative, and sovereign AI adoption looks like for social protection is therefore an urgent priority.

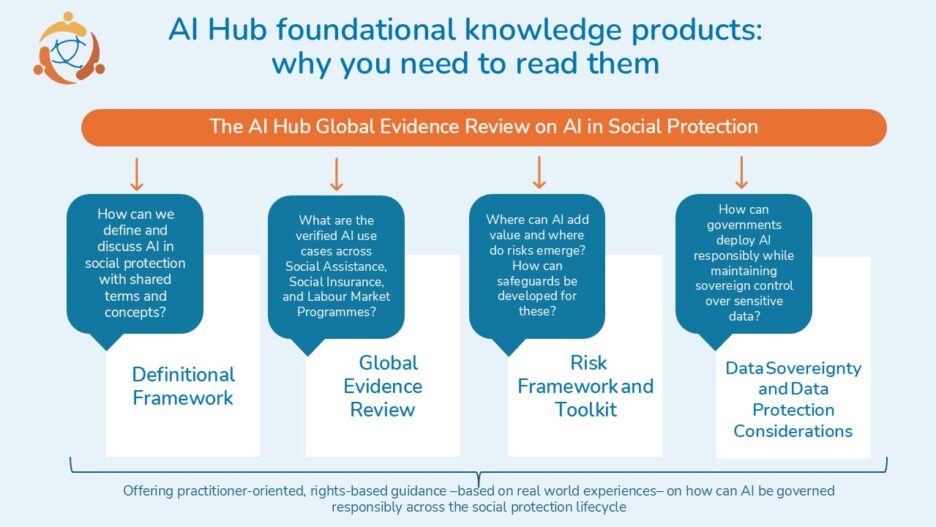

One of the ways in which the AI Hub is closing this gap is through four foundational knowledge products, being developed by a high-level team of social protection and AI technology specialists. During the launch event, Thomas Byrnes, CEO of MarketImpact, gave a ‘sneak peek’ into these.

A global evidence review, focused on low- and middle-income countries, identified 99 distinct AI use cases across 44 countries. Alongside key informant interviews, this review has informed the development of a definitional framework or taxonomy, providing a shared set of AI terms and concepts that apply across social protection use cases.

Two further reports examine critical governance questions: the first analyses the potential risks and safeguards associated with using AI in social protection and provides a rights-based framework and practical toolkit for practitioners, while the second focuses on data protection through the lens of data sovereignty.

These knowledge products provide practical guidance for governments and social protection practitioners in making informed, context-appropriate decisions on whether, where, and how AI should be applied.

From principles to practice: supporting Morocco’s AI journey

The AI Hub is already putting this research and analysis into practice through its collaboration with Morocco’s National Agency for Social Support (ANSS), which delivers social assistance to some 12 million citizens. With data flowing in from multiple systems, explained Hajar Khyati, the agency’s Director of Digitalization and Information Systems, ANSS is exploring how AI can help improve service delivery, not only by organising the large volumes of data, but also by turning them into better policy-making, better program design, and clearer information for citizens.

ANSS now seeks to define a clear roadmap for AI adoption, starting with low-impact use cases and moving gradually towards higher-impact applications. At the same time, the Government wants to strengthen digital governance arrangements and build internal AI and broader digital capacities.

Illustrating its commitment to country-led, responsible, and sovereign AI adoption, the AI Hub is working with ANSS to review the legislative and regulatory environment for AI, co-develop a comprehensive AI strategy aligned with national priorities, and provide technical assistance to prototype a selected AI use case.

A call to the AI and social protection communities: join the conversation

The official launch of the AI Hub marks the beginning of a broader conversation – one that will help refine the Hub’s offer, strengthen its knowledge products, and build a growing evidence base for the responsible and ethical use of AI in social protection.

The knowledge products will be published in Q1 2026. They are ‘living documents’ that will be updated as new evidence and best practices emerge. The AI Hub and its partners are actively seeking feedback from governments, development partners, and practitioners.

If you are a…

Policy maker or social protection institution seeking guidance on whether and how to integrate AI in your social protection sector

The AI Hub can provide you with targeted, time-bound technical assistance. Engagements begin with a needs assessment and use-case scoping, followed by joint design of solutions and prototyping.

To express interest or request support, please fill in the request form and email it to the AI Hub at contact@spdci.org

Our flyer also contains further information on the AI Hub support process.

Development partner or global institution interested in working with the AI Hub, or linking your partners with the AI Hub

Your engagement could help shape the next phase of the AI Hub’s work to support responsible, well-governed, and sovereign AI adoption in the social protection systems of partner countries.

Please visit our website at https://spdci.org/ai-hub/

We welcome your insights.

Author: Corinne Grainger